For more writing, see my blog Bits of Wonder.

A few months ago I very randomly stumbled upon this book:

It’s effectively a compilation of 100 mini-biographies of the most influential scientists in history. Here are my notes and reflections from reading it.

Being a revolutionary requires taking serious personal risks.

Everyone wants to be a revolutionary but no one wants to embarrass themselves. Historically, making claims that ran counter to received wisdom could land you in serious trouble; we’ve all heard about how Galileo was sentenced to house arrest for arguing that the earth orbits the sun. Even today, though, in the absence of a censorious Catholic Church, there are real social costs to advocating unconventional theories. It’s not so much that you’ll be put under house arrest, but people (your colleagues in science, superiors, and funding institutions) will certainly give you a funny look, and may pass you over for research grants or tenure. I recently learned of an amusing anecdote along these lines from Michael Levin, who has been doing paradigm-shifting work on bioelectricity. Levin’s proposal for an early experiment (to modulate the membrane potential of cells in order to intervene in development) was considered so ludicrous at the time that one researcher thought he had gone insane, and emailed Levin’s supervisor to warn him. 1

The trouble for all of us is that what counts as “ordinary common sense” versus “psychotically absurd” is highly dependent on cultural (and academic) context. There are many things we accept as ordinary statements about reality today which in previous centuries were considered totally preposterous. Like the idea that life started out with self-replicating molecules, and that by virtue of random changes in these self-replicating molecules, you incrementally went from single-celled organisms to plants and animals and eventually ourselves. Or the idea that whether two events happened simultaneously is relative, and that moving more quickly makes time slow down.

What I found interesting is that, in the course of such revolutions, many more scientists are often quietly convinced of a theory than publicly defend it. In the time of Galileo, for example, there were many who secretly agreed with him, like Christopher Grienberger:

A correspondent of Galilei, Grienberger was a strong supporter of the Copernican system and offers a good example of the dilemma of Jesuit scientists. He was convinced of the correctness of Galilei’s heliocentric teachings as well as the mistakes in Aristotle’s doctrines on motion. But because of the rigid decree of his Jesuit superior general, Claudius Aquaviva, obliging Jesuits to teach only Aristotelian physics, he was unable to openly teach the Copernican theory.

What are the revolutions of today?

Looking at past paradigm shifts in science raises the question: what are the theories that are considered absurd or fringe today, that most scientists would be embarrassed to defend, but which we’ll eventually look back on as the obvious truth? You can’t know for sure, of course; otherwise winning a Nobel Prize would be easy. But two ideas that seem to me to be slowly gaining ground among academics are (1) panpsychism (or idealism) within philosophy and consciousness studies and (2) opposition to the gene-centric view of biology:

- Panpsychism, in the broadest sense, is just the view that consciousness is fundamental to reality, and is not just a byproduct of specific forms of neural activity. It’s the view that, rather than starting with the primitive of “non-conscious material”, our basic picture of reality should start with conscious experience. (See Philip Goff for a good argument.) It’s a view that, as far as I can tell, a growing number of philosophers and scientists hold, but it’s embarrassing to argue for because it sounds absurd or woo-woo. (And of course many people still think it’s outlandish, e.g. Anil Seth.)

-

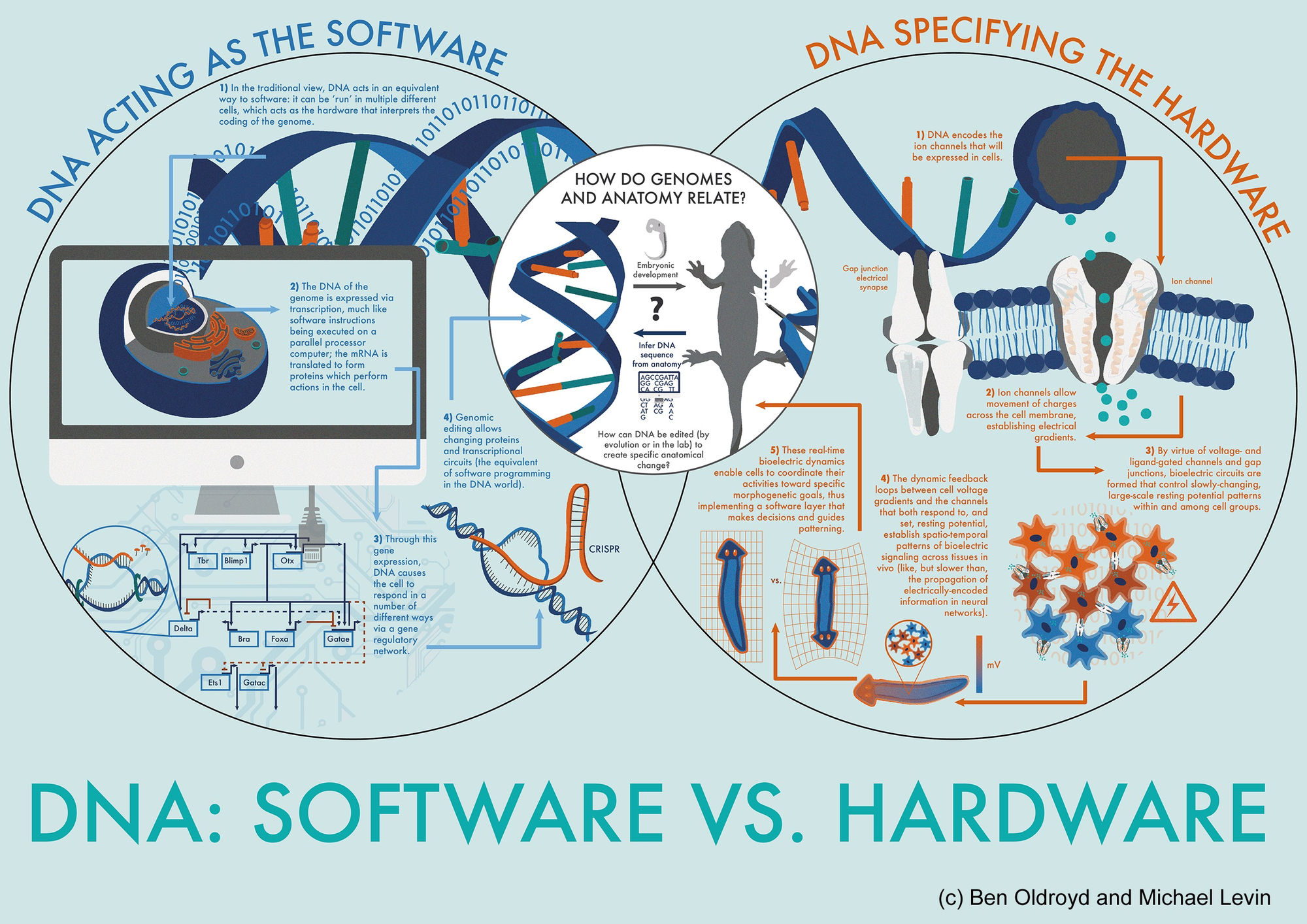

The opposition to the gene-centric view of biology is probably best articulated by Denis Noble, and I think his arguments are partly vindicated in the work of Michael Levin on bioelectricity. Levin argues that bioelectricity influences behavior over and above the genes, i.e. that there are meaningful levers of causal control that override genetic influences. Alfonso Martinez Arias has a similar view. My impression is that the field is slowly coming around to this view, running against the grain of Richard Dawkins’s The Selfish Gene. See this diagram from Levin, which distinguishes the traditional view—genes acting as the software—from the newer view that genes specify the hardware, but the “software” that dictates morphology lies in the bioelectrical activity of the cell.

Revolutions, up close, are incremental

The closer you look at revolutions in the history of science, the more incremental they seem. This is because convincing an entire community of academics of a theory takes time, usually decades at least. Both of the ongoing (and admittedly still contentious) revolutions mentioned above have been playing out over several decades (Levin’s most significant work in bioelectricity began in the 1990s and 2000s, and you could argue the seeds of the opposition to materialism were planted in David Chalmers’s 1995 paper). Going further back, both Newton and Einstein are widely regarded as having revolutionized physics, and they were certainly geniuses in their own right, but when you look closely, both of them could be described as merely extending the work of their predecessors and collaborators. Take, for example, Newton’s law of gravity:

By the 1680s, developing from the ideas of Galileo, Robert Hooke, Christopher Wren, and Edmond Halley, members of the newly established Royal Society, had got as far as speculating that gravity obeys an inverse square law, although they could not prove that this was the only possible explanation of the orbits of the planets around the Sun. It was Newton who put all of the pieces together, added his own insights, and came up with his idea of a universal inverse square law of gravitation, complete with a mathematical proof of its importance for orbits. But where would he have been without the others? And I haven’t even mentioned the person usually cited, correctly, as a profound influence on Newton - Johannes Kepler, who discovered the laws of planetary motion!

See also Poincaré’s work, which anticipated Einstein’s discovery of special relativity:

[Poincaré’s] interest in this topic – which, he showed, seemed to contradict Newton’s laws of mechanics – led him to write a paper in 1905 on the motion of the electron. This paper, and others of his at this time, came close to anticipating the discovery by Albert Einstein, in the same year, of the theory of special relativity.

Progress often involves reviving old theories with new evidence.

This has happened multiple times in the history of science:

- Alfred Wegener came up with the theory of “continental drift” (claiming that the continents were originally next to each other and have moved apart over time) in the 1910s, and it was rejected by most geologists in his lifetime. Then, decades later, his work was revived and formed part of our theory of plate tectonics.

- Gregor Mendel’s work on heredity was not widely recognized until, after his death, William Bateson integrated it with the theory of genetics to bring Mendelian heredity into the mainstream.

Which again brings us to the question: who are the underrated intellectuals of the past whose work we are now rediscovering and re-popularizing?

- Denis Noble describes Conrad Waddington as a “systems biologist well before that term became more popular”, who foresaw epigenetic phenomena that we were only able to study empirically decades later. Waddington’s book, The Strategy of the Genes, originally published in 1957, was recently republished. 2

- David Deutsch’s books on knowledge and rationality (The Beginning of Infinity in particular) have brought renewed public attention to the philosophy of Karl Popper. Deutsch puts Popper’s idea of error correction (the process of coming up with creative conjectures and then criticizing them in the attempt to falsify them, tentatively holding on to what passes our tests until we come up with a better conjecture) as central to the development of life, science, and civilization itself. 3

Multiple people often converge on the same discovery, and getting credit is a matter of chance and marketing.

The periodic table of elements is generally attributed to Dmitry Mendeleyev, who published a version of it in 1869; however, at least three other scientists were independently working on it at around the same time:

Both the English industrial chemist John Newlands and the French mineralogist Alexandre Béguyer de Chancourtois realised independently that if the elements are arranged in order of their atomic weight, there is a repeating pattern of chemical properties. Their ideas were published in the first half of the 1860s, when Béguyer was simply ignored while, in contrast, Newlands was savagely criticised for making such a ridiculous suggestion. In 1864, the German chemist and physician Lothar Meyer, unaware of any of this, published a hint at his own version in a textbook, and then developed a full account of the periodic table for a second edition, which did not appear in print until 1870. By which time Mendeleyev had presented his version of the periodic table to the world of chemistry, in complete ignorance of all the work along similar lines going on in England, France, and Germany.

This kind of concurrent discovery, also known as “multiple discovery”, has occurred many times in science. Newton and Leibniz invented calculus at about the same time, and Darwin and Wallace both came up with natural selection independently.

All that said, one of the things I appreciate about scientists is that, unlike people in many other professions, they tend to be quite conscientious about giving others credit. Take, for example, Darwin’s insistence on giving Wallace credit for the theory of natural selection: he wrote to Wallace that “you ought not to speak of the theory as mine; it is just as much yours as mine.” Arguably, though, we haven’t been as good at this in recent history, especially when money has been on the line. See, for example, the lawsuit over CRISPR, and this recent article on the growing tendency to present others’ discoveries as one’s own in biology:

Whilst one can try to provide some sort of justification for not citing key discoveries from several years ago, more disconcerting is the growing habit of airbrushing recent discoveries perhaps to control the narrative over a field as the key to scientific legitimacy and leadership.

Other miscellaneous notes/highlights

- As science has progressed it has begun to involve greater and greater numbers of humans coordinating together (think: Human Genome Project, Hubble Space Telescope, Large Hadron Collider). Partly as a result of this, science projects are much more objective-oriented than they were 100 years ago. This isn’t necessarily a good thing.

A century ago, the justification for building the 100-inch telescope that Edwin Hubble later used to discover the expansion of the universe was simply to find out more about the universe. In the late twentieth century, the main justification for building and launching the Hubble Space Telescope was determined in advance: the “Hubble Key Project” was formed to measure the expansion rate of the universe and thereby determine its age. By that time, the astronomers would never have got funding if they had simply asked for a big space telescope to explore the universe and find out what it’s like.

- Aristotle was Pretty Cool, Actually. He gets a lot of flak these days, with some scientists arguing that “in the history of most sciences (physics, chemistry, physiology), the most important stage was when scientists finally abandoned the intuitive-but-useless conceptions that Aristotle left us with.” Reading the book helped me appreciate some of Aristotle’s accomplishments, e.g.

Although Aristotle did not claim to have founded the science of zoology, his detailed observations of a wide variety of organisms were quite without precedent. He—or one of his research assistants—must have been gifted with remarkably acute eyesight, since some of the features of insects that he accurately reports were not again observed until the invention of the microscope in the seventeenth century… In some cases his unlikely stories about rare species of fish were proved accurate many centuries later. At other times he states clearly and fairly a biological problem that took millennia to solve, such as the nature of embryonic development.

- Many scientists were born to wealth, but not all of them. Money often helps, but some people overcome the constraints of limited resources. Take, for example, the cases of Gauss, Faraday, and Mendel.

Born in Brunswick, Germany, Gauss was the only child of poor parents. He was rare among mathematicians in that he was a calculating prodigy, and he retained the ability to do elaborate calculations in his head for most of his life. Impressed by this ability and by his gift for languages, his teachers and his devoted mother recommended him to the Duke of Brunswick in 1791, who granted him financial assistance to continue his education locally and then to study mathematics at the University of Göttingen from 1795 to 1798. (147)

Faraday was born in the country village of Newington, Surrey, now a part of south London. His father was a blacksmith who had migrated from the north of England earlier in 1791 to look for work. Faraday received only the rudiments of an education, learning to read, write, and cipher in a church Sunday school. At an early age he began to earn money by delivering newspapers for a book dealer and bookbinder, and at the age of 14 he was apprenticed to the man. Unlike the other apprentices, Faraday took the opportunity to read some of the books brought in for rebinding. The article on electricity in the third edition of the Encyclopædia Britannica particularly fascinated him. (159)

Mendel was born to a family with limited means in Heinzendorf, Austria (now Hyncice, Czech Rep.). His academic abilities were recognized by the local priest, who persuaded his parents to send Mendel away to school at the age of 11. As his father’s only son, Mendel was expected to take over the small family farm, but Mendel chose to enter the Altbrünn Monastery as a novitiate of the Augustinian order, where he was given the name Gregor. The move to the monastery took Mendel to Brünn, the capital of Moravia, where for the first time he was freed from the harsh struggle of former years. He was also introduced to a diverse and intellectual community. (191)

Of course, you also have cases of scientists who, by virtue of their wealth, had the time and energy to devote a life to science:

Galton was born near Sparkbrook in Birmingham, England. His parents had planned that he should study medicine, and a tour of medical institutions on the Continent in his teens - an unusual experience for a student of his age - was followed by training in hospitals in Birmingham and London. He attended Trinity College, University of Cambridge, but left without taking a degree and later continued his medical studies in London. But before they were completed, his father died, leaving him “a sufficient fortune to make me independent of the medical profession.” Galton was then free to indulge his craving for travel. Leisurely expeditions in 1845-6 up the Nile with friends and into the Holy Land alone were preliminaries to a carefully organized penetration into unexplored parts of south-western Africa. (188)

1. See this clip from Levin’s interview with Curt Jaimungal. The warning came after Levin requested samples from the unnamed researcher for his upcoming experiments as a postdoc. In fairness, Levin says that most of the researchers he reached out to were nice and provided him with the samples he requested.

2. I should note that Noble’s views are still pretty contentious and far from widely accepted among biologists; see this fun debate between Noble and Dawkins on some of the disagreements.

3. I happen to think that Deutsch and Popper are wrong on a few important points, and that Popper is not as underrated as Deutsch makes him out to be. But I’ll leave that for a future post.